Top Guys AI

The Antivirus for AI

AI systems are powerful and vulnerable. TopGuys.AI protects your business from AI misuse, data leaks, and model attacks before they happen.

AI is the new attack surface

From prompt injections to data poisoning, AI systems are being hacked, manipulated, and exploited.

Businesses can’t rely on legacy cybersecurity tools; they weren’t built for AI threats.

SMBs are rushing to adopt AI tools, but:

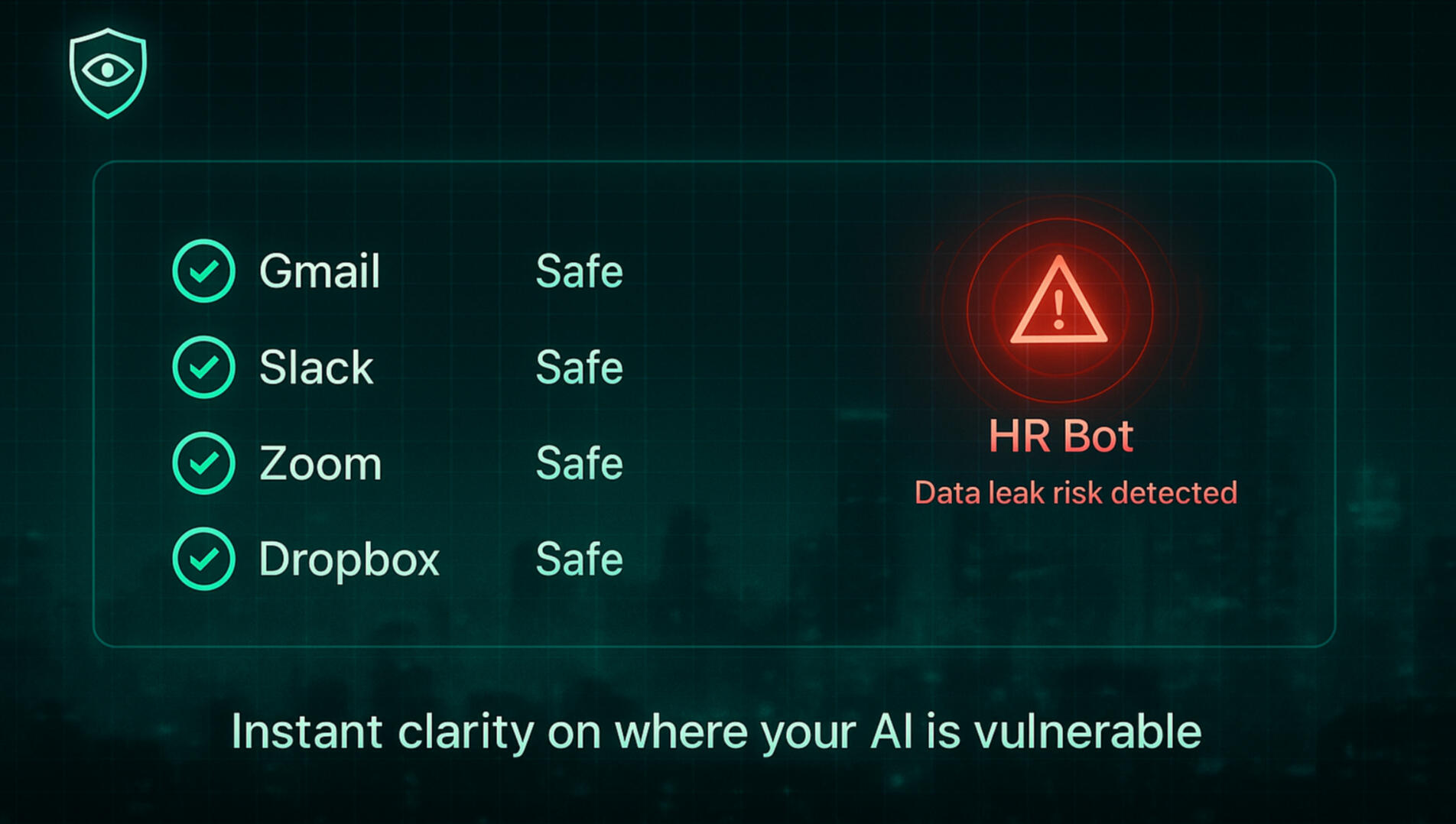

⚠️ Data Leaks: Sensitive info (employee SSNs, contracts, medical data) fed into LLMs.

⚠️ Compliance Risks: AI outputs may violate HIPAA, GDPR, or labor laws.

⚠️ Bad Outputs: Biased, offensive, or hallucinated responses.

⚠️ No Audit Trail: SMBs can’t prove what AI did if regulators or auditors ask.

🔒 Data Guardrails: Prevent sensitive info leaks.

📜 Compliance Filters: HIPAA, GDPR, labor law checks built-in.

🧾 Audit Trail: Every AI action logged & timestamped.

🚫 Content Safety: Blocks unsafe or risky AI responses.

🔐 Privacy & Encryption: Protects all SMB data at rest and in use.

🌍 Multi-Assistant Coverage: Works across all AI tools, starting with your 11 assistants.

Meet your AI firewall

3 Pillars:

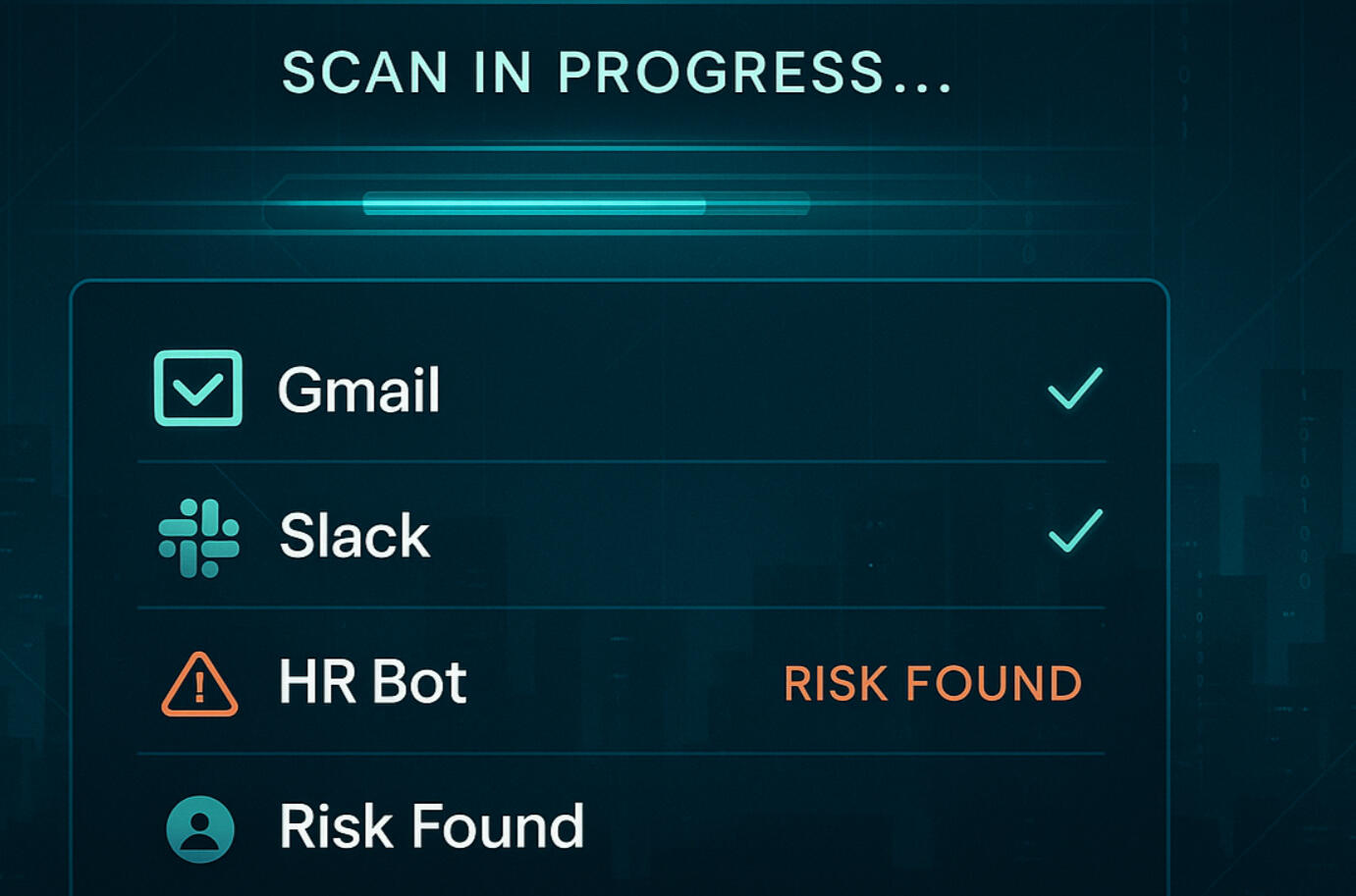

🧱 Detect — Monitors every interaction for malicious intent.

🛡 Defend — Automatically isolates and blocks harmful behavior.

📊 Report — Real-time visibility into model integrity and risk exposure.

Always on. Always learning. Always protecting.